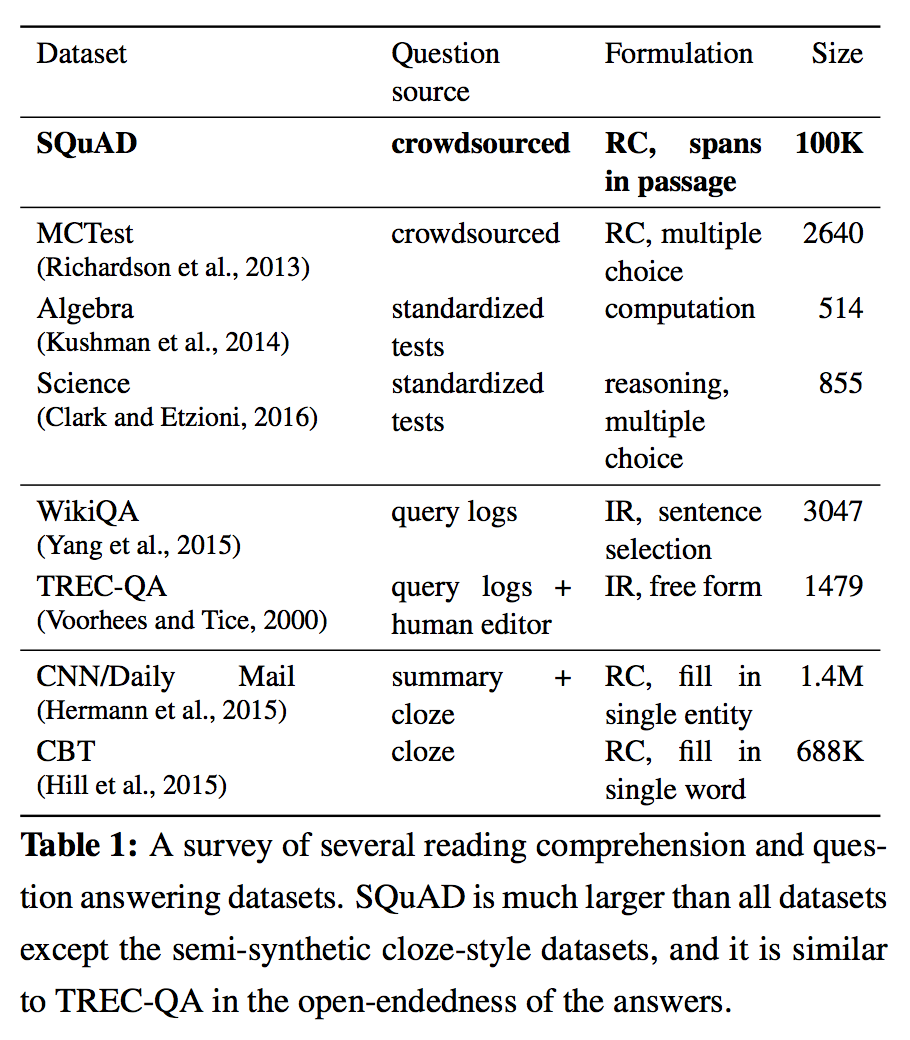

SQuAD

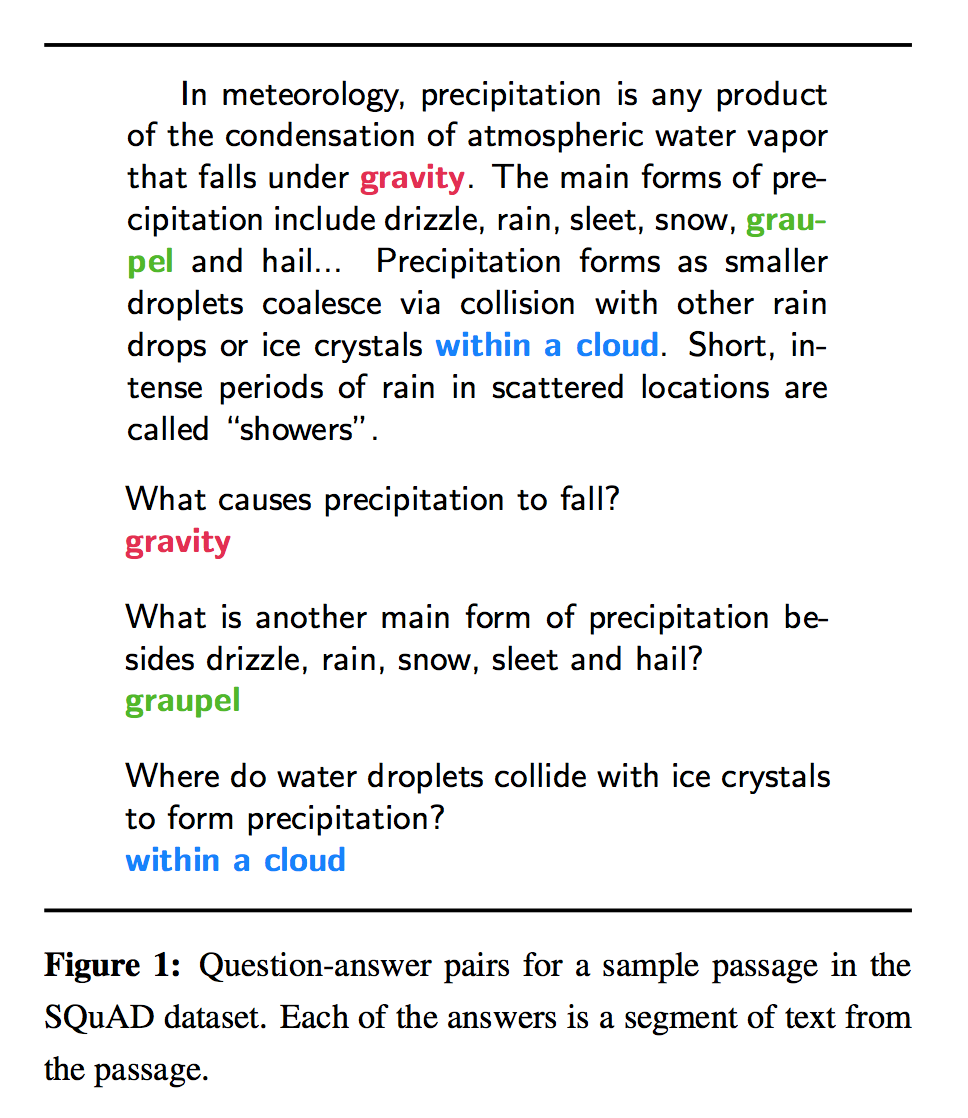

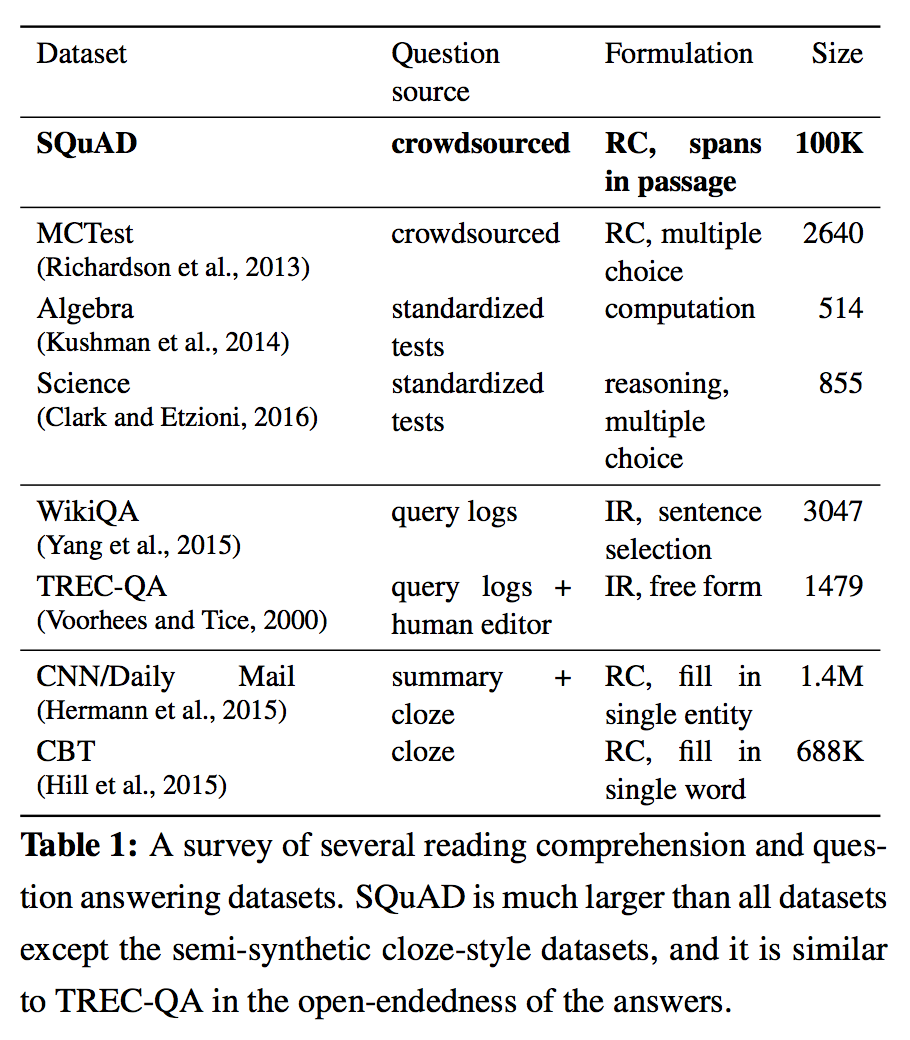

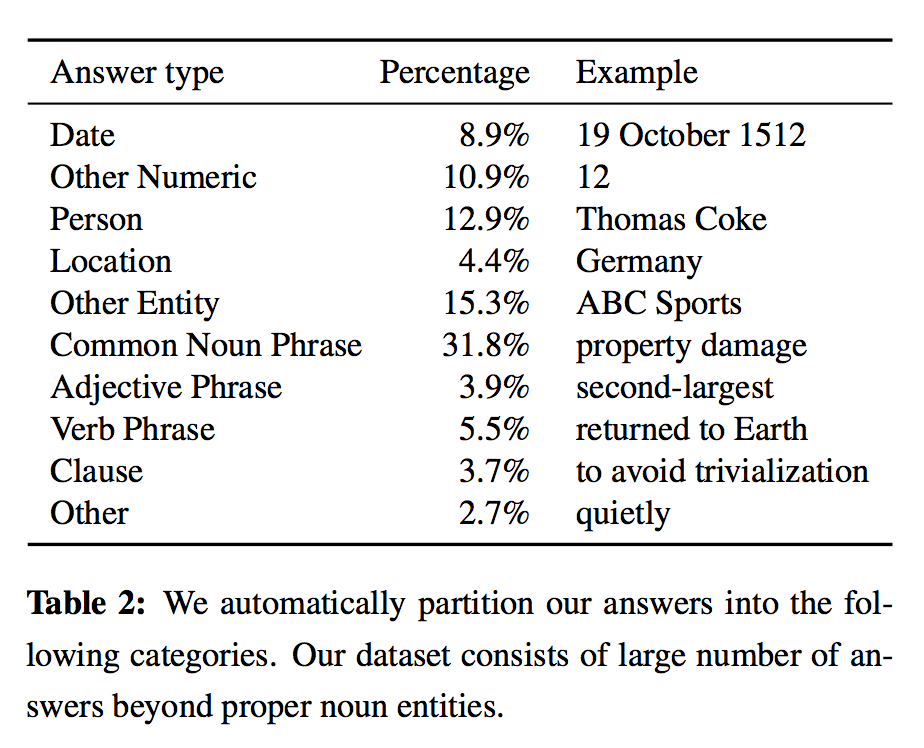

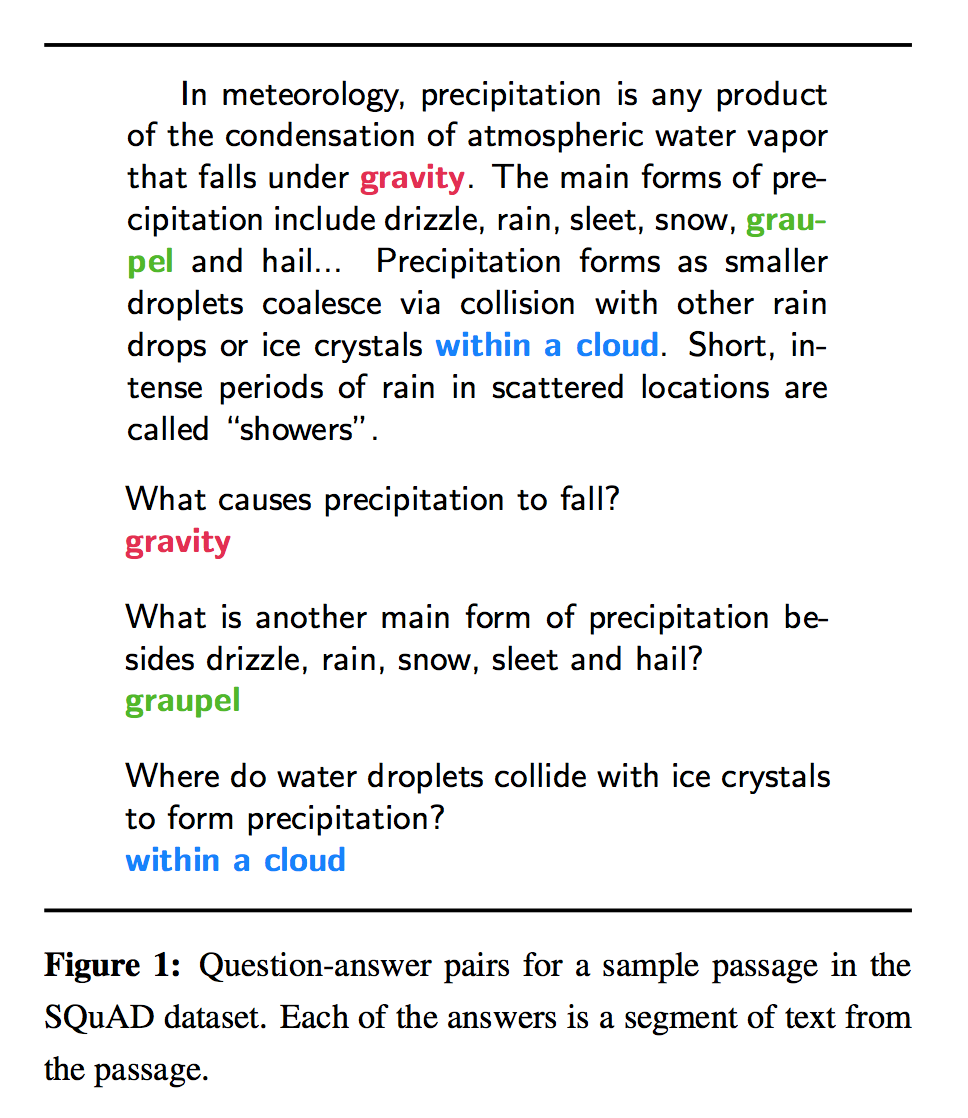

Task

Address the need for a large and high-quality reading comprehension dataset.

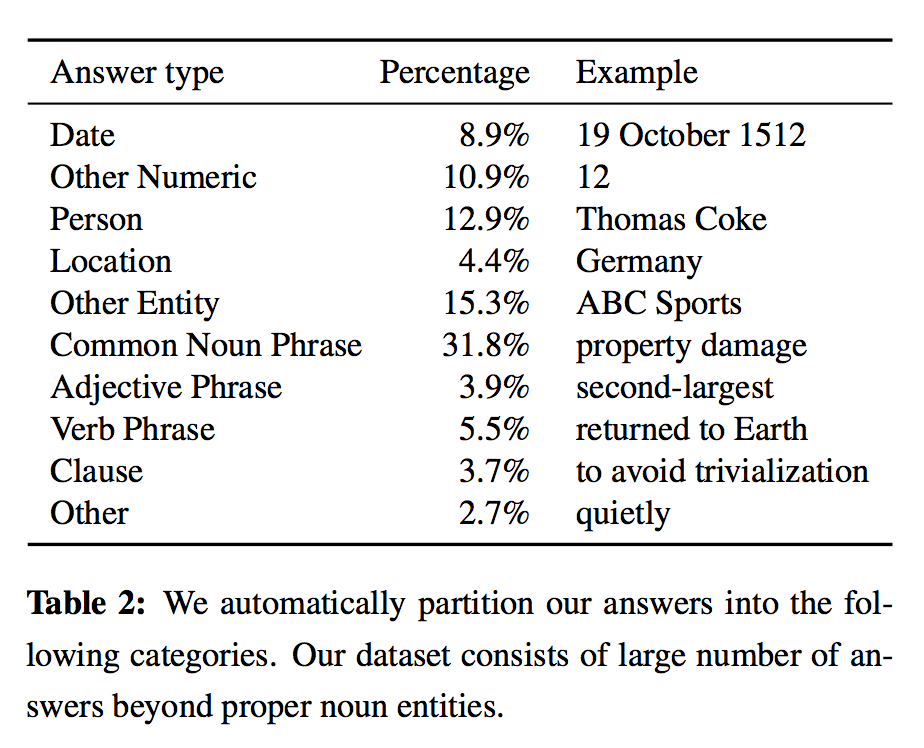

Features

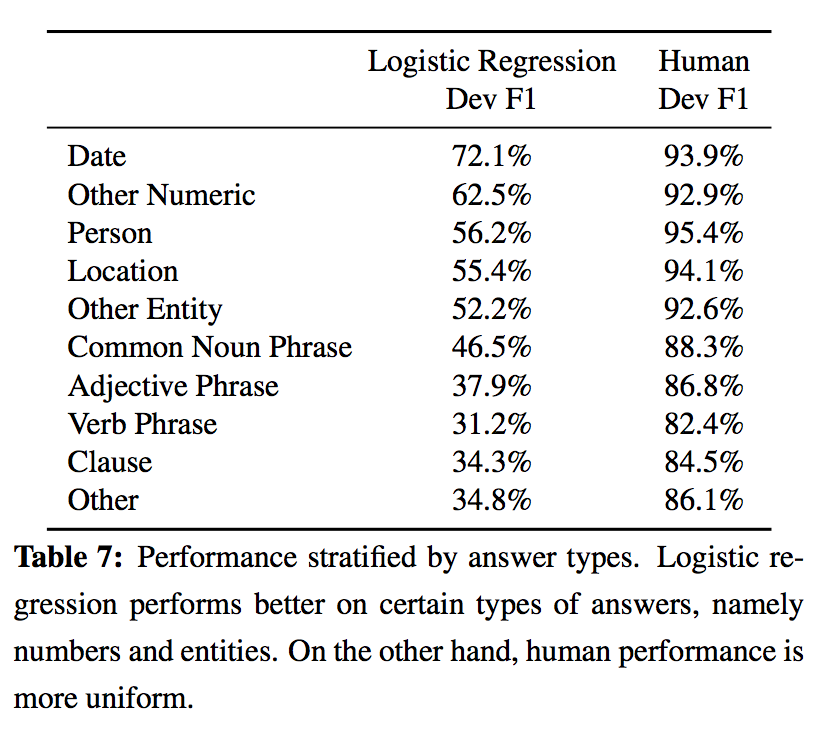

- SQuAD focuses on questions whose answers are entailed by the passage.

- Answering requires selecting a specific span in the sentence.
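Every answer is a contiguous span, so a system only has to choose among candidate spans of the passage. The sketch below is minimal and makes a few assumptions. It uses whitespace tokenization and a hypothetical span-length cap `max_len`. The `answer_start` character offset follows the way the released SQuAD JSON stores each answer (answer text plus character offset). The passage is a toy example.

```python
def answer_from_offset(context: str, answer_start: int, answer_text: str) -> str:
    """Slice the answer out of the passage and check that it really is a span."""
    span = context[answer_start:answer_start + len(answer_text)]
    if span != answer_text:
        raise ValueError(f"offset {answer_start} does not point at {answer_text!r}")
    return span


def candidate_spans(tokens: list[str], max_len: int) -> list[tuple[int, int]]:
    """All (start, end) token spans, end inclusive, of at most max_len tokens."""
    spans = []
    for start in range(len(tokens)):
        for end in range(start, min(start + max_len, len(tokens))):
            spans.append((start, end))
    return spans


context = "The Broncos defeated the Panthers in Super Bowl 50."
print(answer_from_offset(context, 4, "Broncos"))  # Broncos
tokens = context.split()
print(len(candidate_spans(tokens, max_len=2)))    # 17
```

A span-selection model scores each candidate and returns the best one. The cap `max_len` keeps the number of candidates roughly linear in passage length rather than quadratic.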

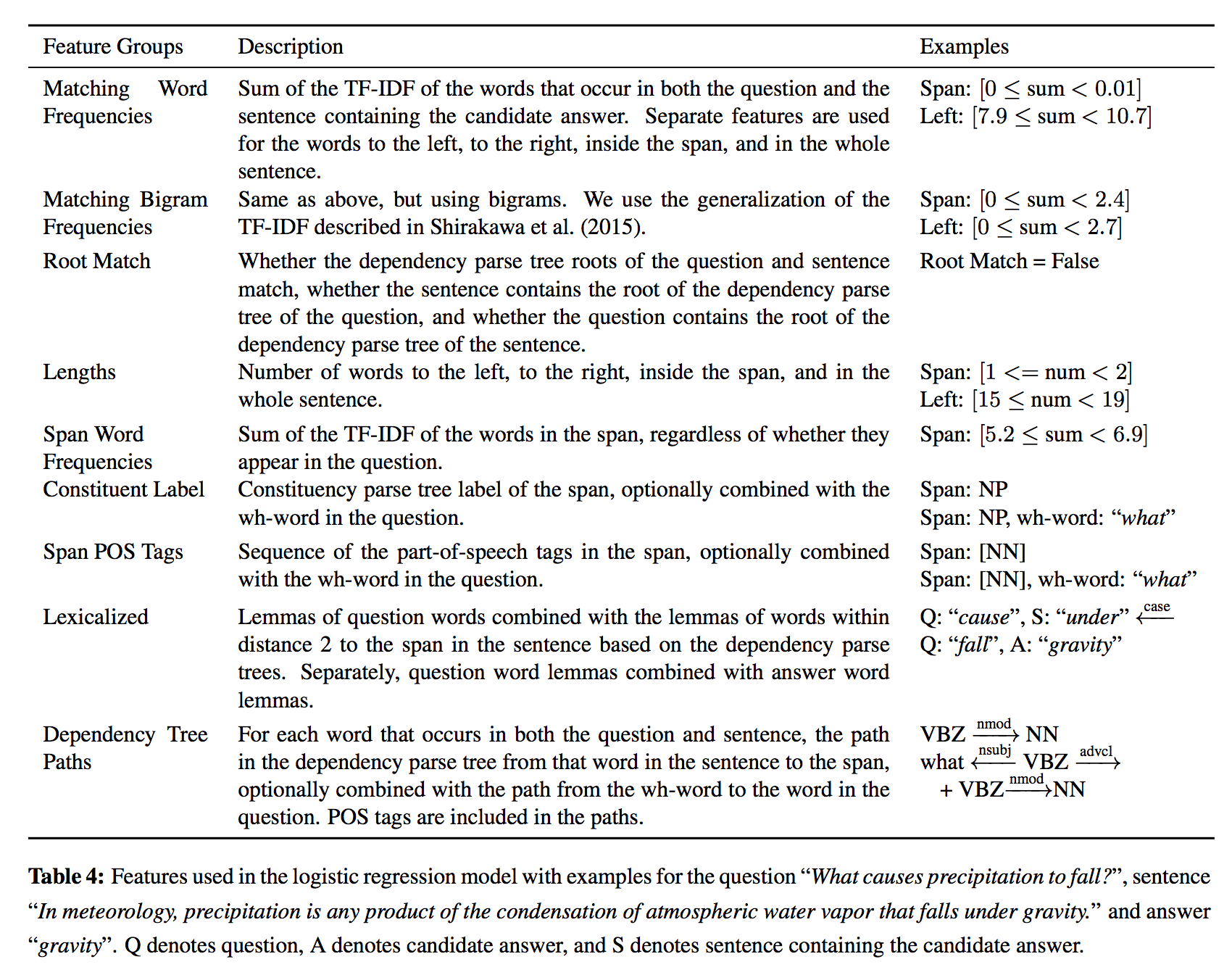

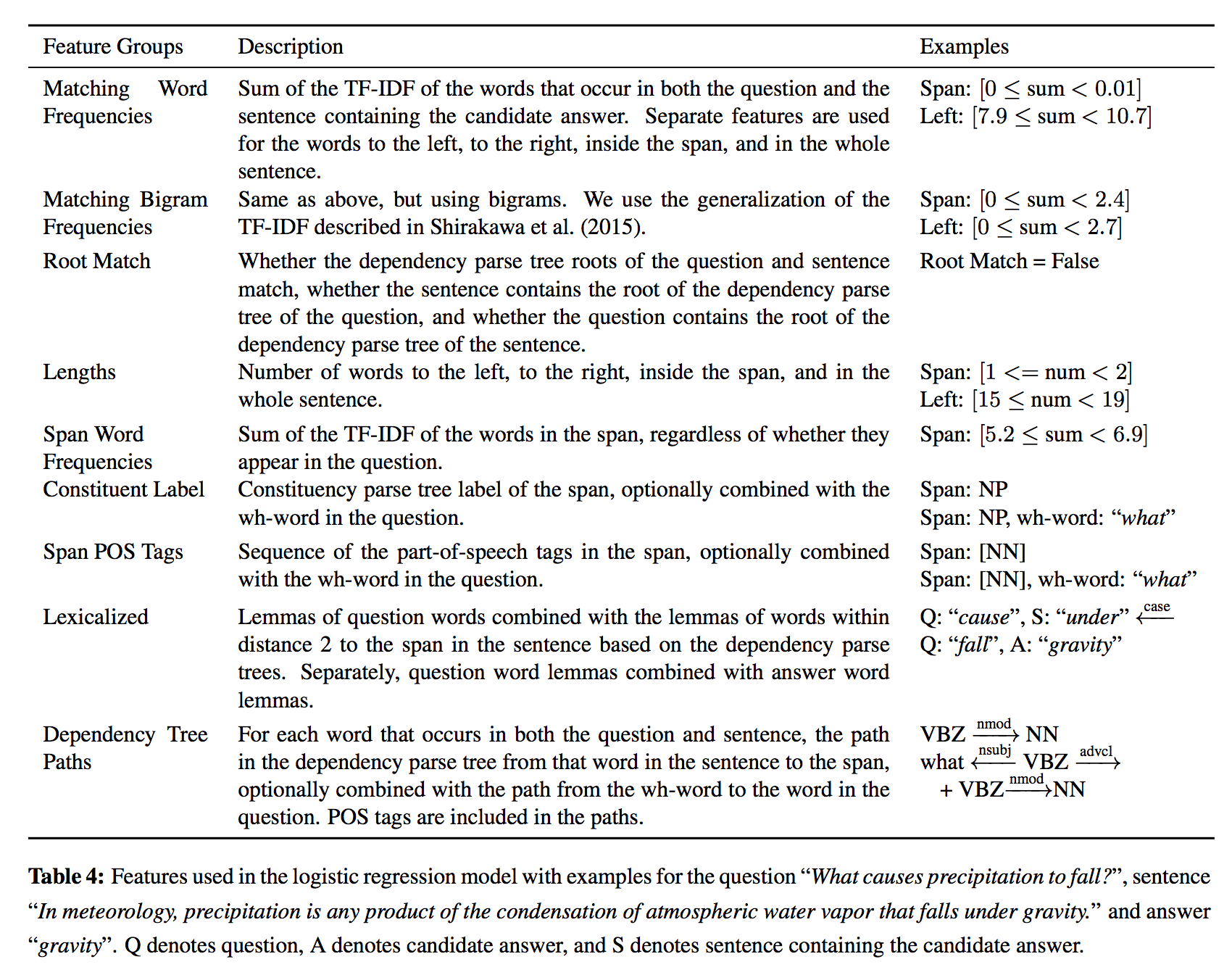

Baseline

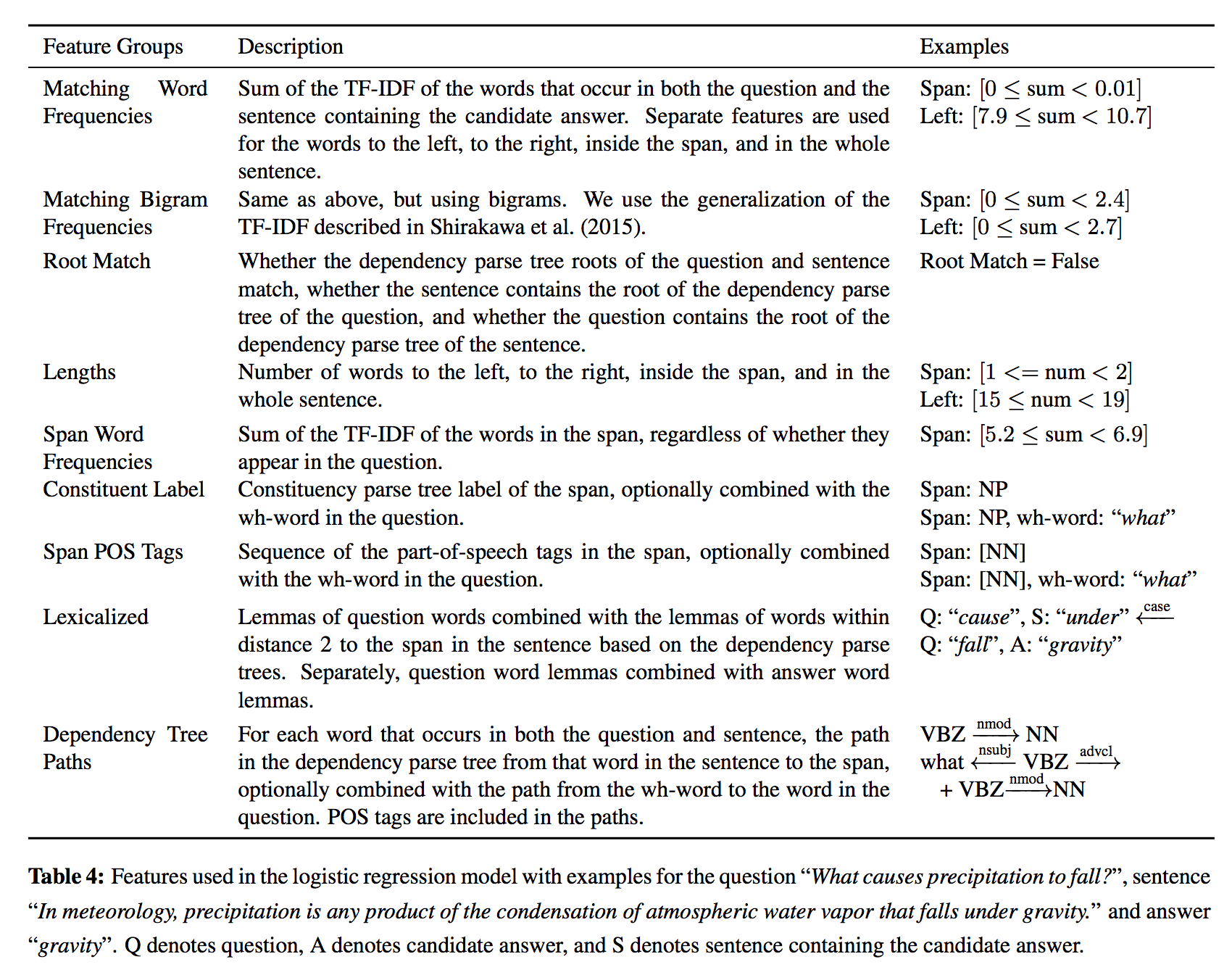

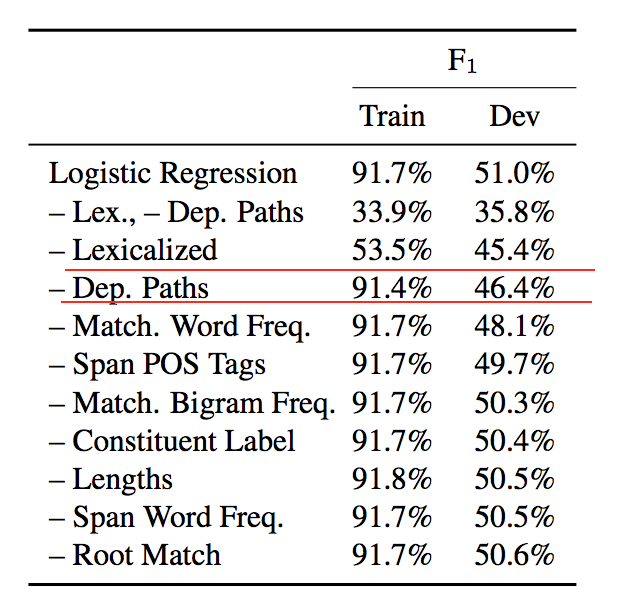

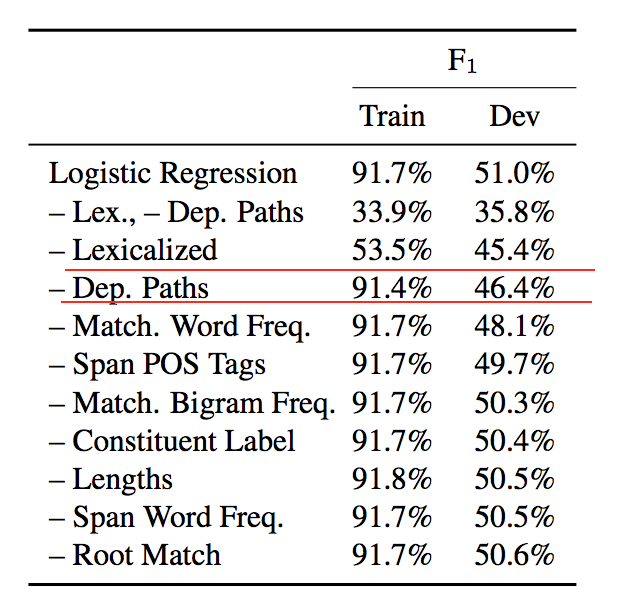

- Logistic regression (LR) model with hand-crafted features


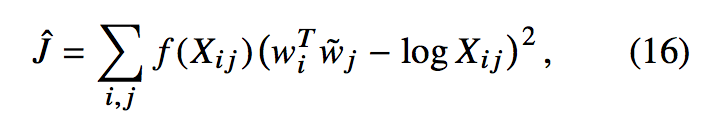

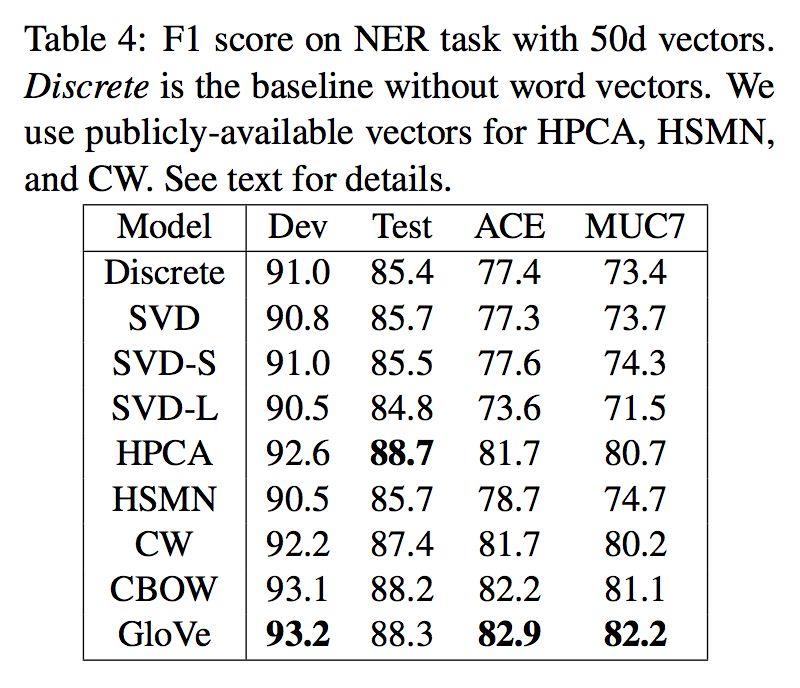

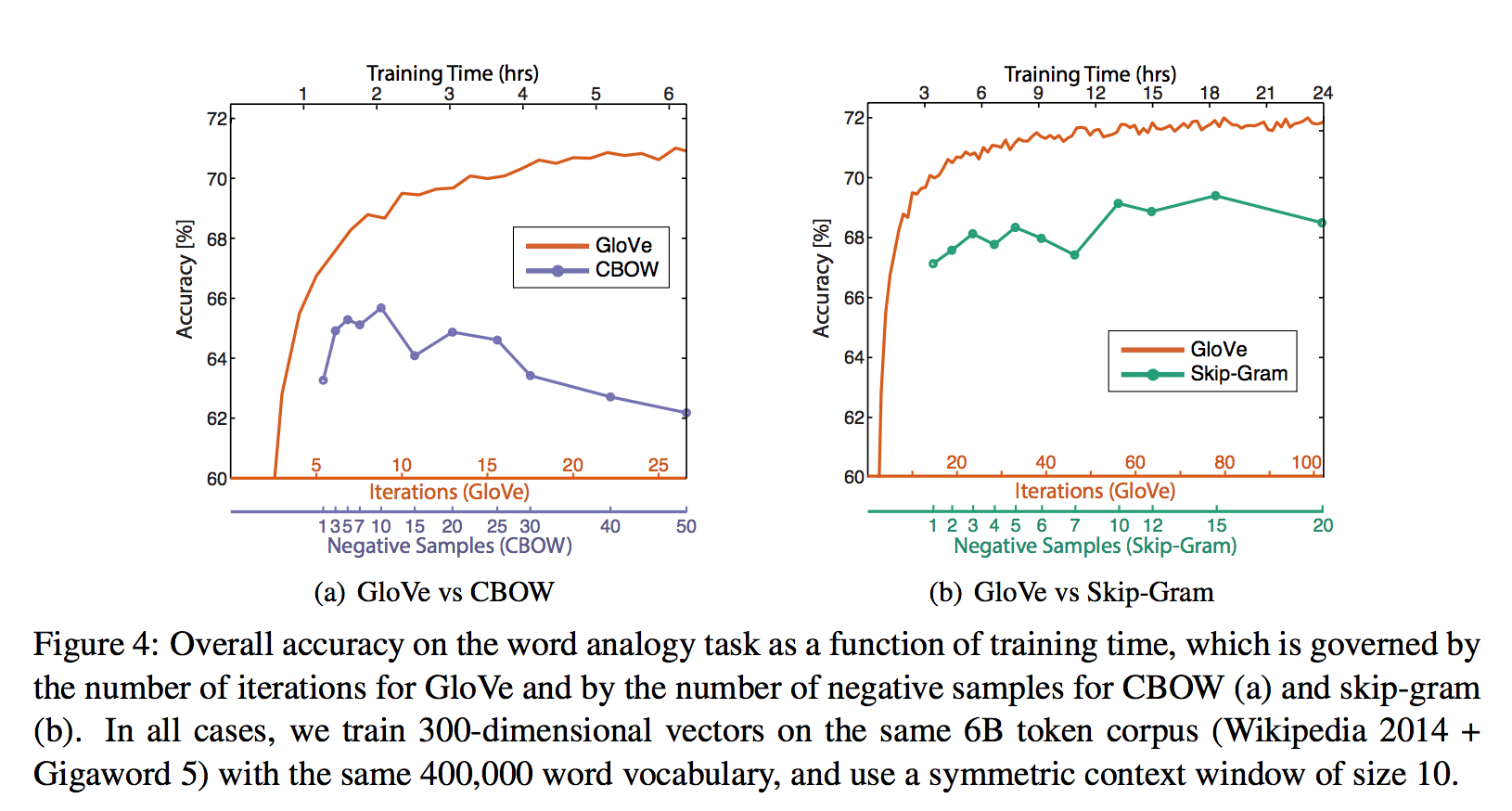

Improves word representations with a global log-bilinear regression model. It combines the advantages of global matrix factorization methods, which use global word co-occurrence statistics, with those of local context window methods.

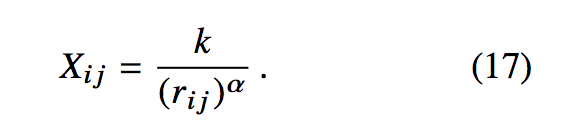

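where $X_{ij}$ is the entry of the co-occurrence matrix $X$ that counts how often word $j$ occurs in the context of word $i$.

$f$ is a weighting function. It fixes a drawback of plain least squares, which weighs all co-occurrences equally, including rare ones that are noisy and carry less information.

See Section 3 of the paper for the full derivation of the cost function.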
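For reference, here is GloVe's weighted least-squares cost written out. The symbols are: word vectors $w_i$, context vectors $\tilde{w}_j$, biases $b_i$ and $\tilde{b}_j$, vocabulary size $V$, and the weighting function $f$:

$$
J = \sum_{i,j=1}^{V} f(X_{ij}) \left( w_i^{\top} \tilde{w}_j + b_i + \tilde{b}_j - \log X_{ij} \right)^2,
\qquad
f(x) = \begin{cases} (x / x_{\max})^{\alpha} & \text{if } x < x_{\max} \\ 1 & \text{otherwise} \end{cases}
$$

Because $f(0) = 0$, word pairs that never co-occur contribute nothing. The sum therefore effectively runs only over the nonzero entries of $X$.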

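For the corpora studied in the paper, the authors find that co-occurrence counts follow a power law in frequency rank with exponent $\alpha = 1.25$. The model's cost depends on the number of nonzero entries of $X$, which therefore scales as $|X| = O(|C|^{0.8})$ in the corpus size $|C|$. Online window-based methods, by comparison, scale as $O(|C|)$.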
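The exponent 0.8 comes from modelling each count as a power law in its frequency rank $r_{ij}$ (Section 3.2 of the paper). Summing the counts over all nonzero entries relates the corpus size to $|X|$ through a generalized harmonic number $H_{|X|,\alpha}$. Its asymptotics then give the scaling:

$$
X_{ij} = \frac{k}{(r_{ij})^{\alpha}},
\qquad
|C| = \sum_{ij} X_{ij} = \sum_{r=1}^{|X|} \frac{k}{r^{\alpha}} = k\, H_{|X|,\alpha}
\quad\Longrightarrow\quad
|X| = \begin{cases} O(|C|) & \text{if } \alpha < 1 \\ O\!\left(|C|^{1/\alpha}\right) & \text{if } \alpha > 1 \end{cases}
$$

With $\alpha = 1.25$ this gives $|X| = O(|C|^{1/1.25}) = O(|C|^{0.8})$. Here $\alpha$ is the power-law exponent. It is unrelated to the $\alpha = 3/4$ used in the weighting function $f$.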


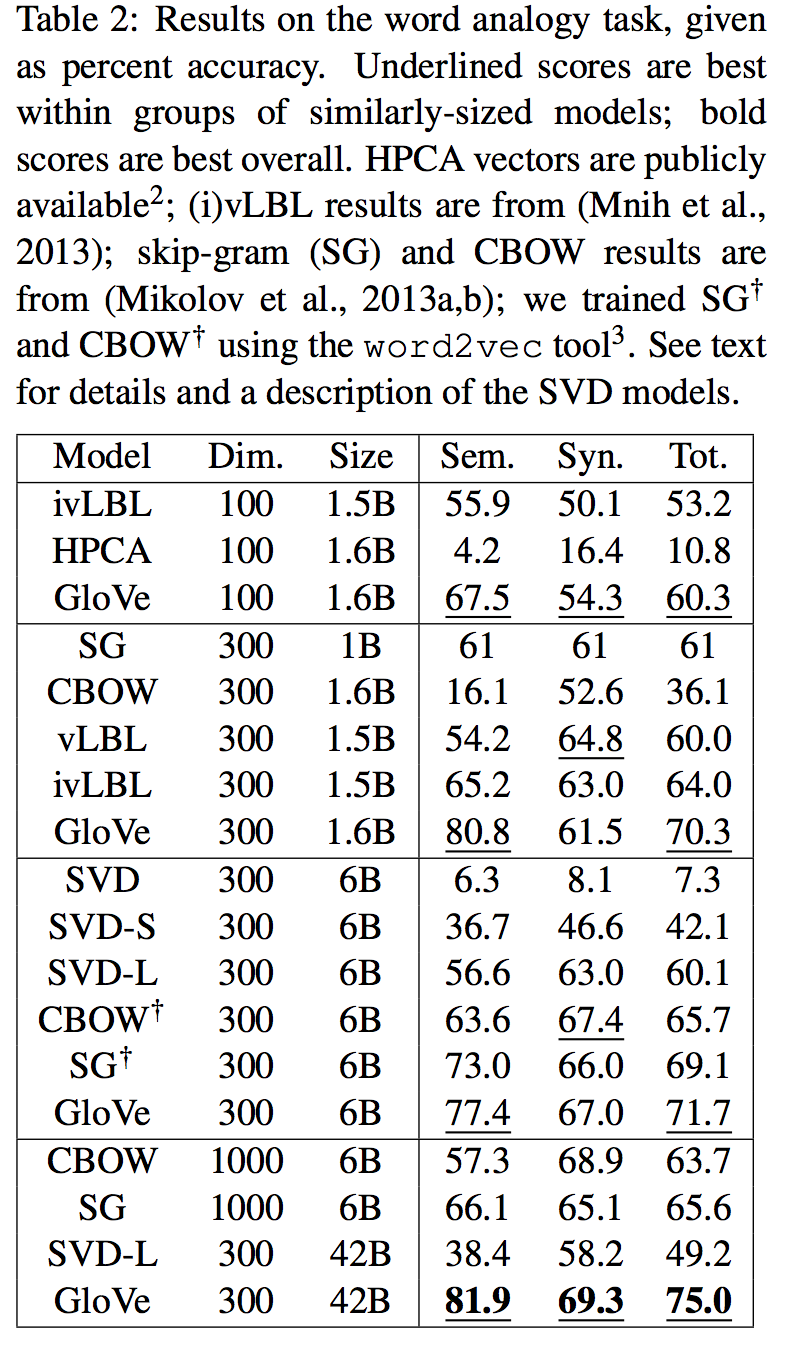

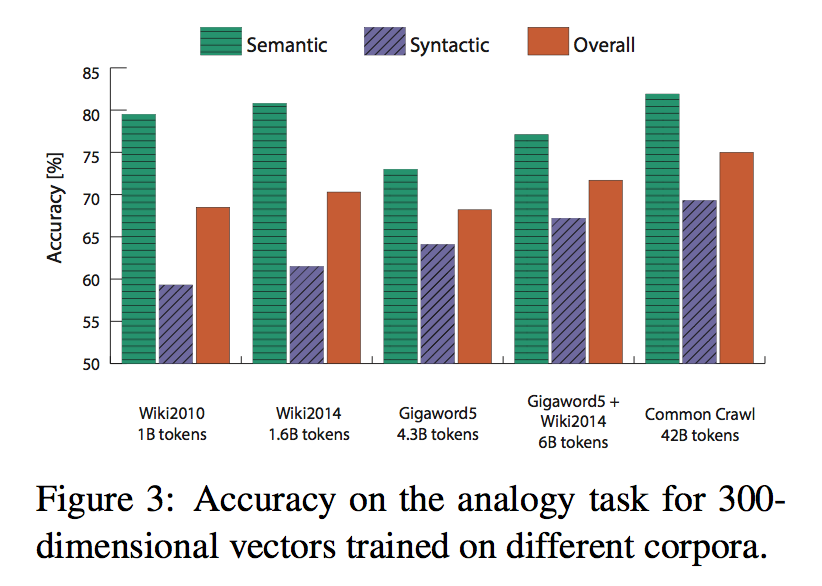

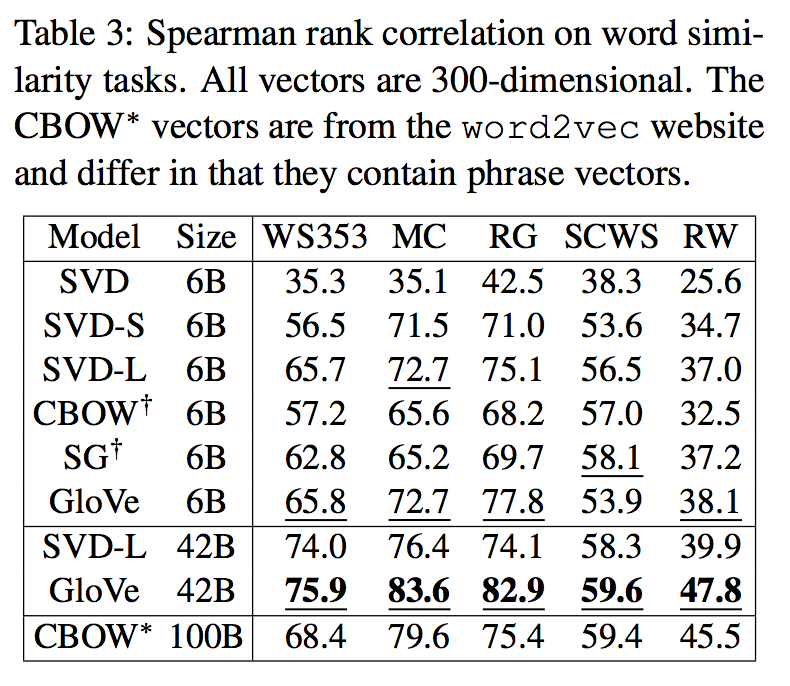

We train on five corpora of varying sizes: a 2010 Wikipedia dump with 1 billion tokens; a 2014 Wikipedia dump with 1.6 billion tokens; Gigaword 5, which has 4.3 billion tokens; the combination Gigaword5 + Wikipedia2014, which has 6 billion tokens; and 42 billion tokens of web data from Common Crawl. We tokenize and lowercase each corpus with the Stanford tokenizer, build a vocabulary of the 400,000 most frequent words, and then construct a matrix of co-occurrence counts X.
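The co-occurrence matrix can be built in one pass over the tokens. The sketch below is minimal and assumes an already tokenized, lowercased corpus and a symmetric window. Following the paper, a word pair that is $d$ positions apart contributes $1/d$ to the count. The name `build_cooccurrence` and the toy sizes are illustrative only; the paper keeps the 400,000 most frequent words and uses ten words of context on each side.

```python
from collections import Counter, defaultdict


def build_cooccurrence(tokens, vocab_size, window):
    """Return (vocab, X), with X[(i, j)] the distance-weighted count of word j near word i."""
    # Keep the vocab_size most frequent words and give each an integer id.
    vocab = [w for w, _ in Counter(tokens).most_common(vocab_size)]
    index = {w: i for i, w in enumerate(vocab)}
    X = defaultdict(float)
    for pos, word in enumerate(tokens):
        if word not in index:
            continue
        # Pair the word with each of the `window` words to its left; recording the pair
        # in both directions also covers right context and keeps X symmetric.
        for d in range(1, window + 1):
            if pos - d < 0:
                break
            other = tokens[pos - d]
            if other in index:
                i, j = index[word], index[other]
                X[(i, j)] += 1.0 / d  # a pair d words apart contributes 1/d
                X[(j, i)] += 1.0 / d
    return vocab, dict(X)


tokens = "the cat sat on the mat".split()
vocab, X = build_cooccurrence(tokens, vocab_size=10, window=2)
print(vocab[0], X[(0, 2)])  # the 1.0  ("the" and "sat" are 2 apart twice)
```

Storing only nonzero entries in a dict reflects the point above: the work scales with $|X|$, not with the full $V \times V$ matrix.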

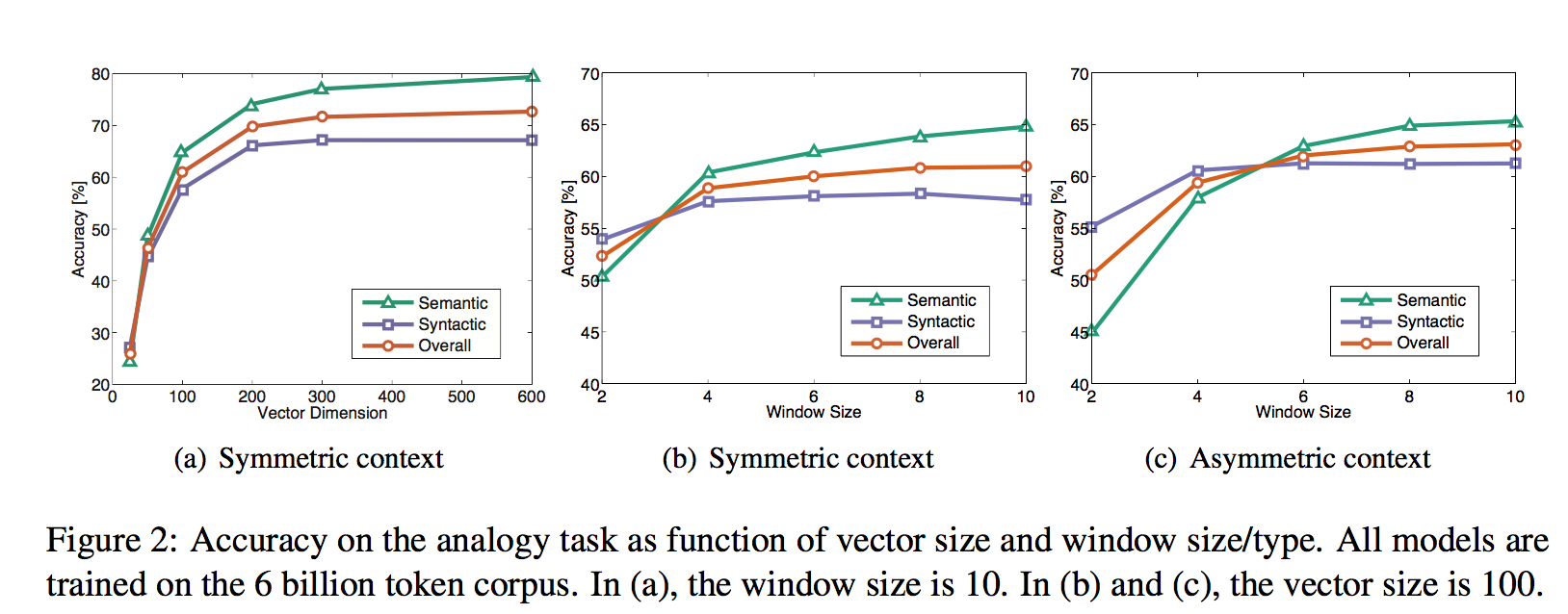

For all our experiments, we set $x_{\max} = 100$ and $\alpha = 3/4$. We train the model using AdaGrad (Duchi et al., 2011), stochastically sampling nonzero elements from $X$, with an initial learning rate of 0.05. We run 50 iterations for vectors smaller than 300 dimensions, and 100 iterations otherwise (see Section 4.6 for more details about the convergence rate). Unless otherwise noted, we use a context of ten words to the left and ten words to the right.

The model generates two sets of word vectors, $W$ and $\tilde{W}$. When $X$ is symmetric, $W$ and $\tilde{W}$ are equivalent and differ only as a result of their random initializations; the two sets of vectors should perform equivalently. On the other hand, there is evidence that for certain types of neural networks, training multiple instances of the network and then combining the results can help reduce overfitting and noise and generally improve results (Ciresan et al., 2012). With this in mind, we choose to use the sum $W + \tilde{W}$ as our word vectors. Doing so typically gives a small boost in performance, with the biggest increase in the semantic analogy task.
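The training setup described above can be sketched in NumPy. This is a toy sketch under stated assumptions, not the authors' released code. $X$ is a dict mapping `(i, j)` to a nonzero count. The defaults mirror the quoted settings: $x_{\max} = 100$, $\alpha = 3/4$, AdaGrad with initial learning rate 0.05, and 50 epochs. The function names, the initialization range, and AdaGrad accumulators starting at 1 are illustrative choices.

```python
import numpy as np


def weight(x, x_max=100.0, alpha=0.75):
    """Weighting function f(x): (x / x_max)^alpha below x_max, 1 otherwise."""
    return (x / x_max) ** alpha if x < x_max else 1.0


def glove_loss(X, W, Wc, b, bc):
    """J = sum over nonzero X_ij of f(X_ij) * (w_i . w~_j + b_i + b~_j - log X_ij)^2."""
    return sum(weight(x) * (W[i] @ Wc[j] + b[i] + bc[j] - np.log(x)) ** 2
               for (i, j), x in X.items())


def train_glove(X, vocab_size, dim, epochs=50, lr=0.05, seed=0):
    """Minimize J with AdaGrad, visiting the nonzero entries of X in random order."""
    rng = np.random.default_rng(seed)
    W = (rng.random((vocab_size, dim)) - 0.5) / dim   # word vectors W
    Wc = (rng.random((vocab_size, dim)) - 0.5) / dim  # context vectors W~
    b, bc = np.zeros(vocab_size), np.zeros(vocab_size)
    # AdaGrad accumulators of squared gradients; starting at 1 avoids dividing by zero.
    gW, gWc = np.ones_like(W), np.ones_like(Wc)
    gb, gbc = np.ones_like(b), np.ones_like(bc)
    entries = list(X.items())
    for _ in range(epochs):
        for k in rng.permutation(len(entries)):
            (i, j), x = entries[k]
            # f-weighted residual; the constant factor 2 of the gradient is folded into lr.
            fdiff = weight(x) * (W[i] @ Wc[j] + b[i] + bc[j] - np.log(x))
            grad_wi, grad_wj = fdiff * Wc[j], fdiff * W[i]
            W[i] -= lr * grad_wi / np.sqrt(gW[i])
            Wc[j] -= lr * grad_wj / np.sqrt(gWc[j])
            b[i] -= lr * fdiff / np.sqrt(gb[i])
            bc[j] -= lr * fdiff / np.sqrt(gbc[j])
            gW[i] += grad_wi ** 2
            gWc[j] += grad_wj ** 2
            gb[i] += fdiff ** 2
            gbc[j] += fdiff ** 2
    return W, Wc, b, bc


X = {(0, 1): 3.0, (1, 0): 3.0, (0, 2): 1.0, (2, 0): 1.0, (1, 2): 2.0, (2, 1): 2.0}
before = glove_loss(X, *train_glove(X, vocab_size=3, dim=4, epochs=0))
W, Wc, b, bc = train_glove(X, vocab_size=3, dim=4, epochs=200)
after = glove_loss(X, W, Wc, b, bc)
vectors = W + Wc  # use the sum W + W~ as the final word vectors
```

On this toy $X$ the loss falls during training. The last line mirrors the choice of $W + \tilde{W}$ as the word vectors.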

The word analogy task contains semantic questions (e.g. "Athens is to Greece as Berlin is to ___") and syntactic questions (e.g. "dance is to dancing as fly is to ___").

The results show a large advantage for GloVe on the semantic questions.
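Both kinds of question are answered the same way in the paper. For "a is to b as c is to ?", the answer is the word $d$ whose vector is closest to $w_b - w_a + w_c$ by cosine similarity. The sketch below is minimal and over a toy embedding table. Normalizing each vector first and excluding the three query words from the candidates are assumptions, though both are common conventions. The 4-d vectors are made up for illustration.

```python
import numpy as np


def analogy(a, b, c, words, vectors):
    """Answer "a is to b as c is to ?" with the word closest (cosine) to v_b - v_a + v_c."""
    idx = {w: k for k, w in enumerate(words)}
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)  # unit-length rows
    target = unit[idx[b]] - unit[idx[a]] + unit[idx[c]]
    target = target / np.linalg.norm(target)
    scores = unit @ target           # cosine similarity with every word
    for w in (a, b, c):
        scores[idx[w]] = -np.inf     # assumption: query words are not valid answers
    return words[int(np.argmax(scores))]


# Toy 4-d vectors with dimensions (capital, Greece, Germany, France), made up for illustration.
words = ["athens", "greece", "berlin", "germany", "paris", "france"]
vectors = np.array([
    [1, 1, 0, 0], [0, 1, 0, 0],
    [1, 0, 1, 0], [0, 0, 1, 0],
    [1, 0, 0, 1], [0, 0, 0, 1],
], dtype=float)
print(analogy("athens", "greece", "berlin", words, vectors))  # germany
```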

Neural Enquirer: Learning to Query Tables with Natural Language

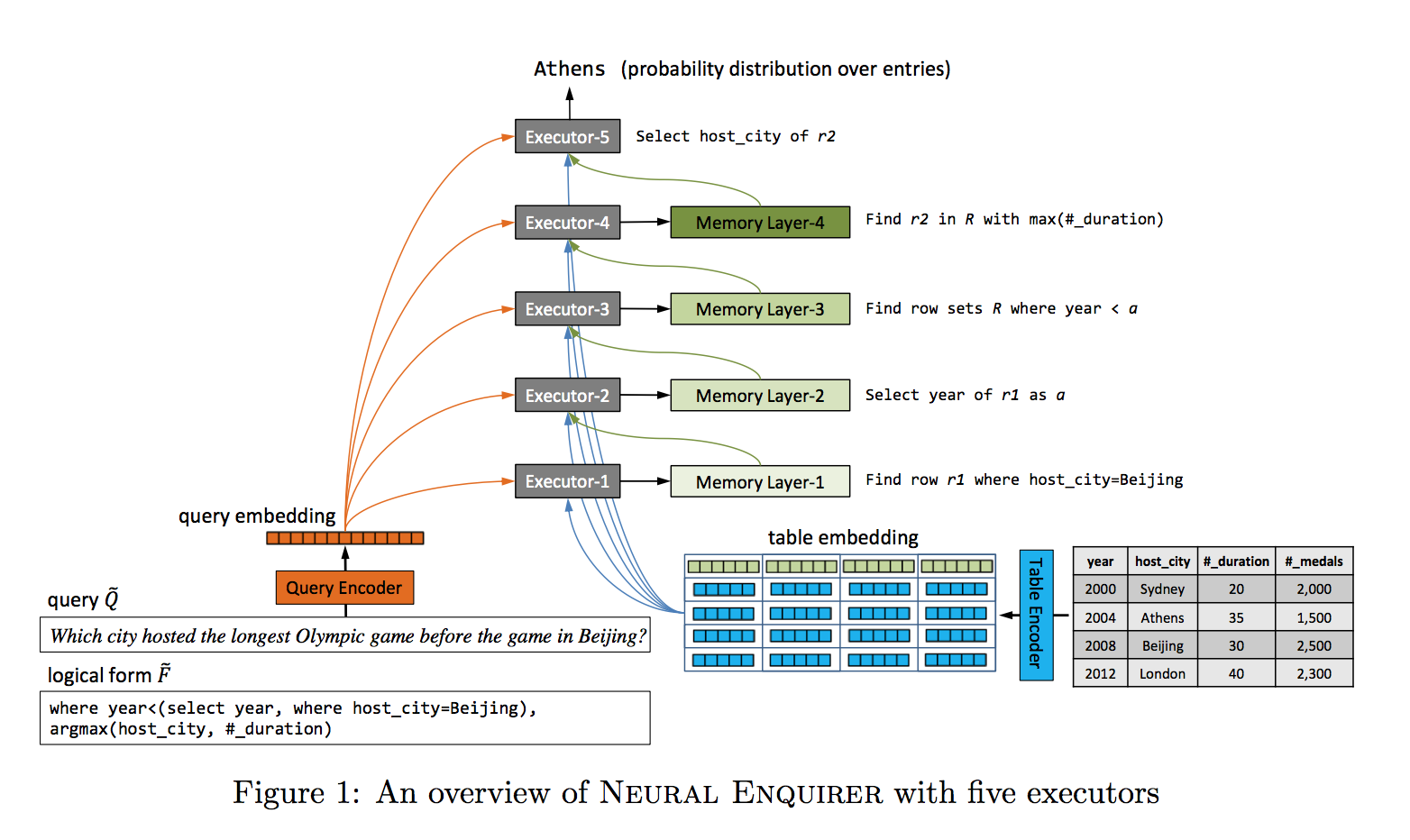

Neural Enquirer (NE) is a neural network that executes a natural language query against a knowledge-base table to find the answer.

Compared with end-to-end trained semantic parsers, NE is fully differentiable.

Query encoder: bidirectional RNN.
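A bidirectional RNN reads the query left-to-right and right-to-left and combines the two results into a query embedding. The NumPy sketch below is minimal and rests on several assumptions. It uses a plain tanh RNN cell with small random parameters and concatenates the final forward and backward states. The paper's actual cell type, dimensions, and combination step may differ.

```python
import numpy as np


def rnn_final_state(xs, W_x, W_h, b):
    """Run a tanh RNN over xs (shape T x d_in) and return its last hidden state."""
    h = np.zeros(W_h.shape[0])
    for x in xs:
        h = np.tanh(W_x @ x + W_h @ h + b)
    return h


def encode_query(word_vecs, fwd, bwd):
    """Concatenate the final states of a left-to-right and a right-to-left RNN."""
    h_fwd = rnn_final_state(word_vecs, *fwd)
    h_bwd = rnn_final_state(word_vecs[::-1], *bwd)
    return np.concatenate([h_fwd, h_bwd])


def init_rnn(rng, d_in, d_h):
    """Small random parameters (W_x, W_h, b) for one direction."""
    return (0.1 * rng.normal(size=(d_h, d_in)),
            0.1 * rng.normal(size=(d_h, d_h)),
            np.zeros(d_h))


rng = np.random.default_rng(0)
query = rng.normal(size=(6, 8))  # 6 hypothetical word embeddings of size 8
fwd, bwd = init_rnn(rng, 8, 5), init_rnn(rng, 8, 5)
q = encode_query(query, fwd, bwd)
print(q.shape)  # (10,)
```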

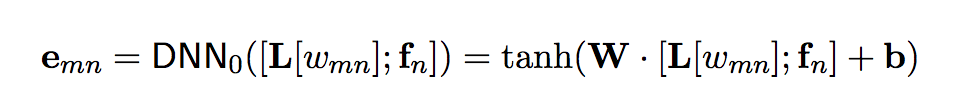

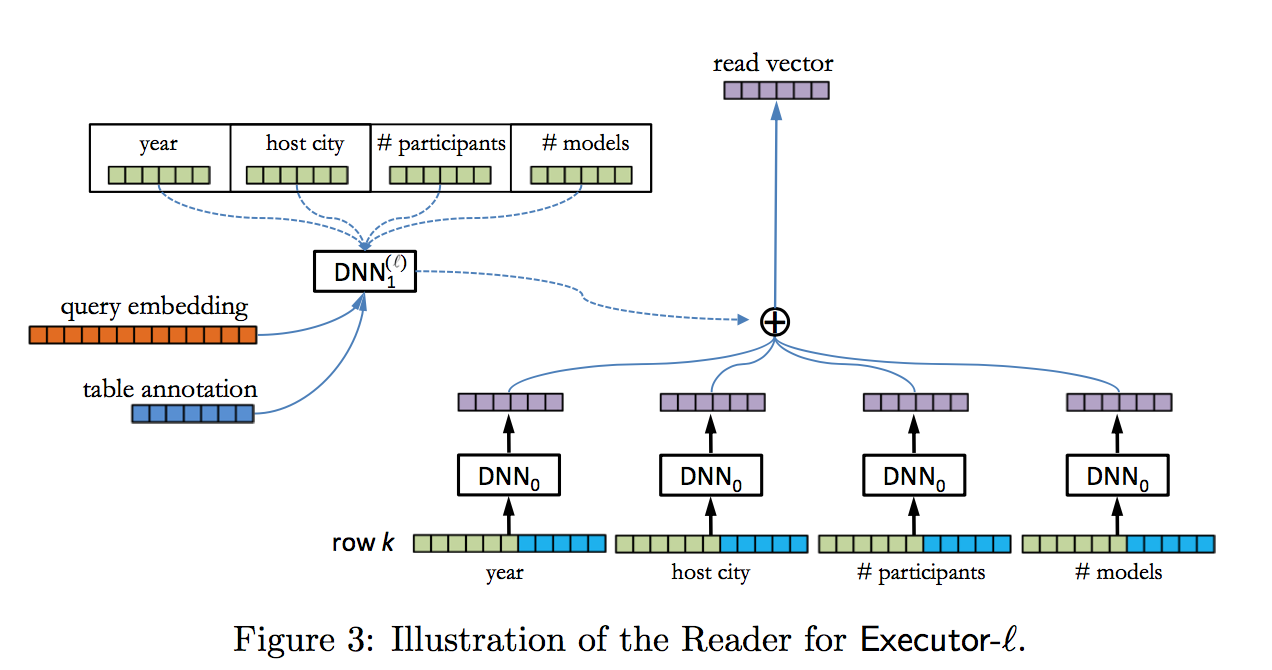

The embedding of the table cell at row m, column n is a single-layer nonlinear transformation of the concatenation of the cell value's embedding and the embedding of that column's field name.
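In symbols this is $e_{mn} = \sigma(W [u_{mn}; f_n] + b)$. Here $u_{mn}$ is the cell value's embedding and $f_n$ is the field-name embedding. The NumPy sketch below embeds a whole table at once. It assumes tanh as the nonlinearity $\sigma$ and uses random parameters. The concatenation order follows the wording above and does not matter in practice, since $W$ is learned. The name `encode_table` and all shapes are illustrative.

```python
import numpy as np


def encode_table(values, fields, W, b):
    """Embed every cell: e[m, n] = tanh(W @ [values[m, n]; fields[n]] + b).

    values: (M, N, d_v) cell-value embeddings; fields: (N, d_f) field-name embeddings;
    W: (d_e, d_v + d_f); b: (d_e,). Returns (M, N, d_e).
    """
    M, N, _ = values.shape
    # Repeat each column's field embedding down all M rows, then concatenate per cell.
    field_grid = np.broadcast_to(fields, (M, N, fields.shape[1]))
    concat = np.concatenate([values, field_grid], axis=-1)
    return np.tanh(concat @ W.T + b)


rng = np.random.default_rng(0)
M, N, d_v, d_f, d_e = 3, 4, 6, 5, 7
cell_values = rng.normal(size=(M, N, d_v))
field_names = rng.normal(size=(N, d_f))
W, b = rng.normal(size=(d_e, d_v + d_f)), np.zeros(d_e)
E = encode_table(cell_values, field_names, W, b)
print(E.shape)  # (3, 4, 7)
```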


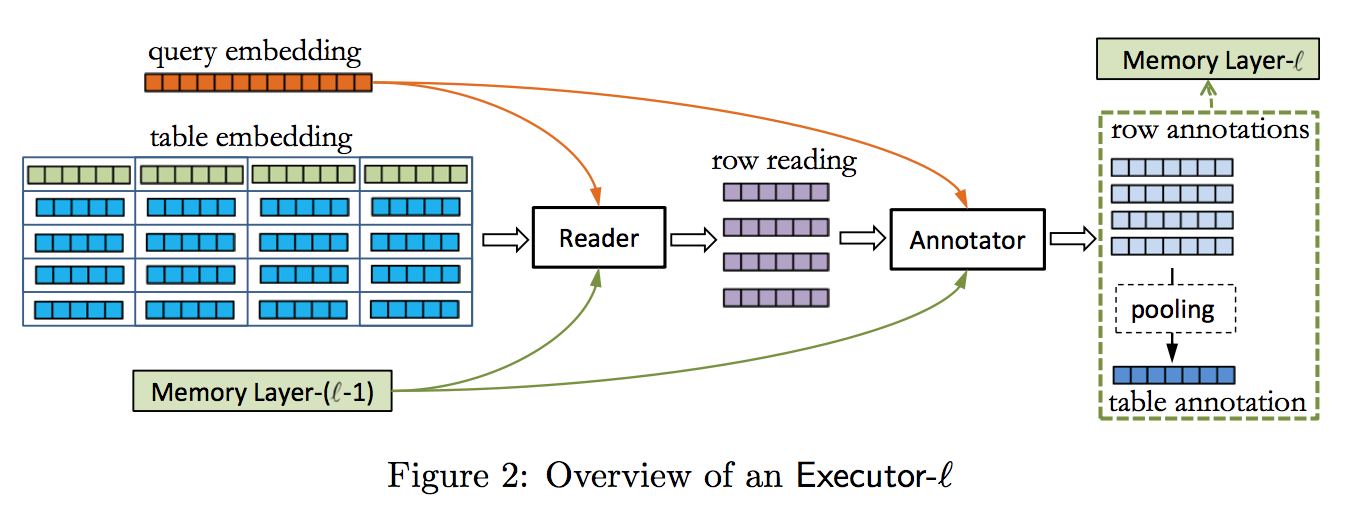

Each executor is responsible for one type of operation (select, where, max, etc.).

A query is executed as a cascade of executors.

#### Memory

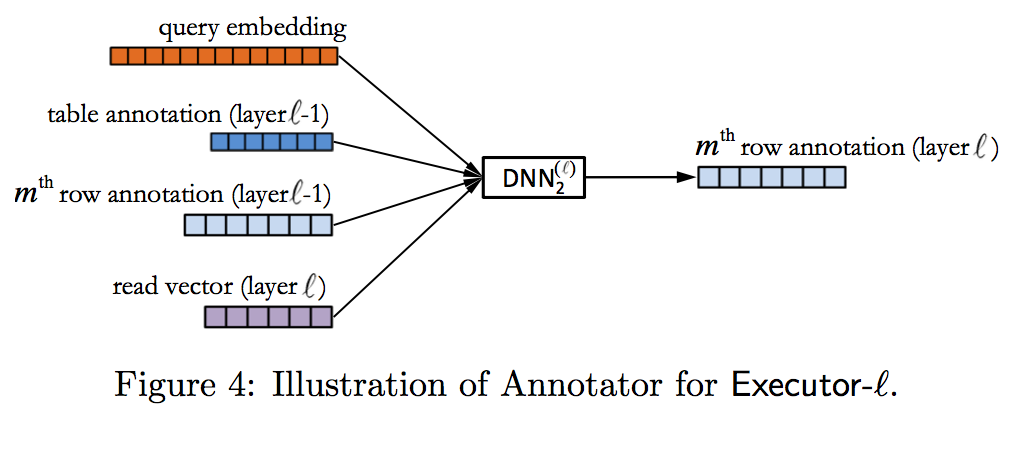

The memory layer stores the intermediate results of the executors. Each executor's annotator has access to the memory written by the previous layer.
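Below is a structural sketch of the cascade and its memory in NumPy. Everything concrete here is an assumption for illustration, not the paper's parameterization. Each table row is represented by a single vector, standing in for what an executor reads from that row. The annotator is a single tanh layer, and the global summary is produced by max-pooling. The names `executor` and `run_cascade` are hypothetical.

```python
import numpy as np


def executor(q, rows, memory, W):
    """One hypothetical executor: update per-row annotations and a pooled global summary.

    q: (d_q,) query embedding; rows: (M, d_r), one vector per table row;
    memory: (annotations (M, d_a), summary (d_a,)) written by the previous executor.
    """
    ann, summary = memory
    M = rows.shape[0]
    # Every row sees its own content, the query, its old annotation and the old summary.
    inputs = np.concatenate([rows, np.tile(q, (M, 1)), ann, np.tile(summary, (M, 1))], axis=1)
    new_ann = np.tanh(inputs @ W.T)      # (M, d_a)
    return new_ann, new_ann.max(axis=0)  # max-pool rows into the new summary


def run_cascade(q, rows, weights, d_a):
    """Run executors in sequence; executor l reads only the memory written by executor l - 1."""
    memory = (np.zeros((rows.shape[0], d_a)), np.zeros(d_a))
    memories = []
    for W in weights:
        memory = executor(q, rows, memory, W)
        memories.append(memory)
    return memories


rng = np.random.default_rng(0)
M, d_q, d_r, d_a = 4, 6, 5, 3
weights = [0.1 * rng.normal(size=(d_a, d_r + d_q + 2 * d_a)) for _ in range(3)]
memories = run_cascade(rng.normal(size=d_q), rng.normal(size=(M, d_r)), weights, d_a)
print(len(memories), memories[-1][0].shape)  # 3 (4, 3)
```

Because every step is a differentiable array operation, the whole cascade can be trained end-to-end. That property is the one the notes highlight.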

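The reader's attention is agnostic to the values in a row: the attention weights over a row's fields do not depend on the cell values in that row.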
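The NumPy sketch below illustrates value-agnostic attention. The weights over fields are computed only from the field embeddings, the query, and the previous global memory. As a result, one weight vector is shared by all rows. Each row's read vector is then the weighted sum of that row's cell embeddings. The dot-product scoring through a projection `P` is an assumption for illustration, as is every name here; the paper's scoring function may differ.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax of a 1-d array."""
    e = np.exp(z - z.max())
    return e / e.sum()


def read_rows(cells, fields, q, g, P):
    """Attend over fields, then read each row as the weighted sum of its cell embeddings.

    cells: (M, N, d) cell embeddings; fields: (N, d_f) field embeddings;
    q: query embedding; g: previous global memory; P: (d_f, len(q) + len(g)).
    """
    scores = fields @ (P @ np.concatenate([q, g]))  # one score per field; cell values unused
    weights = softmax(scores)                       # (N,), the same for every row
    reads = np.einsum("n,mnd->md", weights, cells)  # (M, d), one read vector per row
    return reads, weights


rng = np.random.default_rng(0)
M, N, d, d_f, d_q, d_g = 3, 4, 5, 6, 7, 2
cells = rng.normal(size=(M, N, d))
fields = rng.normal(size=(N, d_f))
q, g = rng.normal(size=d_q), rng.normal(size=d_g)
P = rng.normal(size=(d_f, d_q + d_g))
reads, w = read_rows(cells, fields, q, g, P)
```

Doubling every cell value leaves `w` unchanged. That invariance is exactly what "agnostic to the values" means here.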

N2N

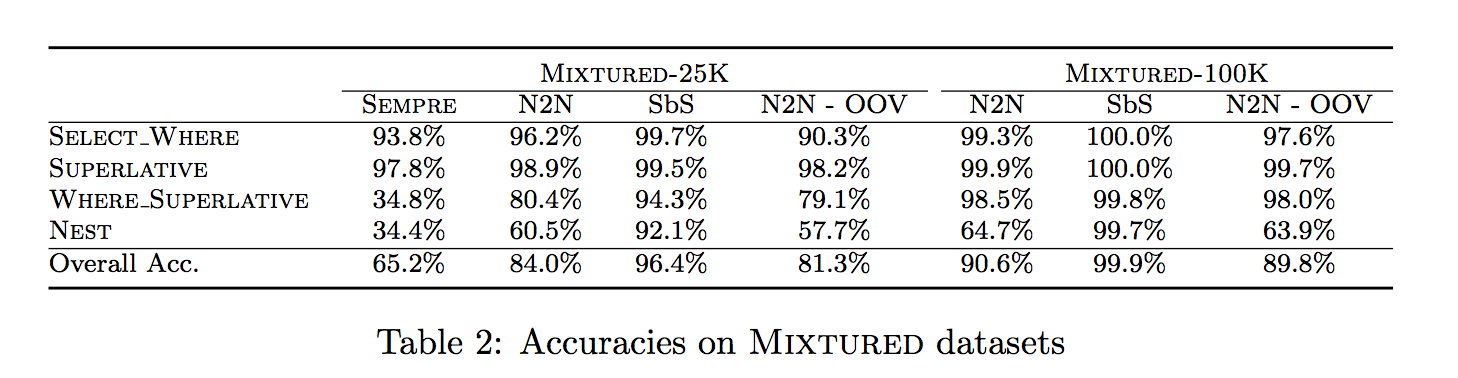

This paper works toward exploiting the table's compositional structure through N2N (end-to-end) training. The aim is to minimize the drawbacks of representing the query's semantics as a single sentence embedding.

Dataset: Huawei’s own synthetic dataset (not yet publicly available).

Comparison against SEMPRE:

## My questions

How can speed be optimized if each query needs to search the whole table using distributed representations (i.e., scalability)?