Today I watched the talk Andrew Ng gave at the GPU Technology Conference.

The important screenshots are:

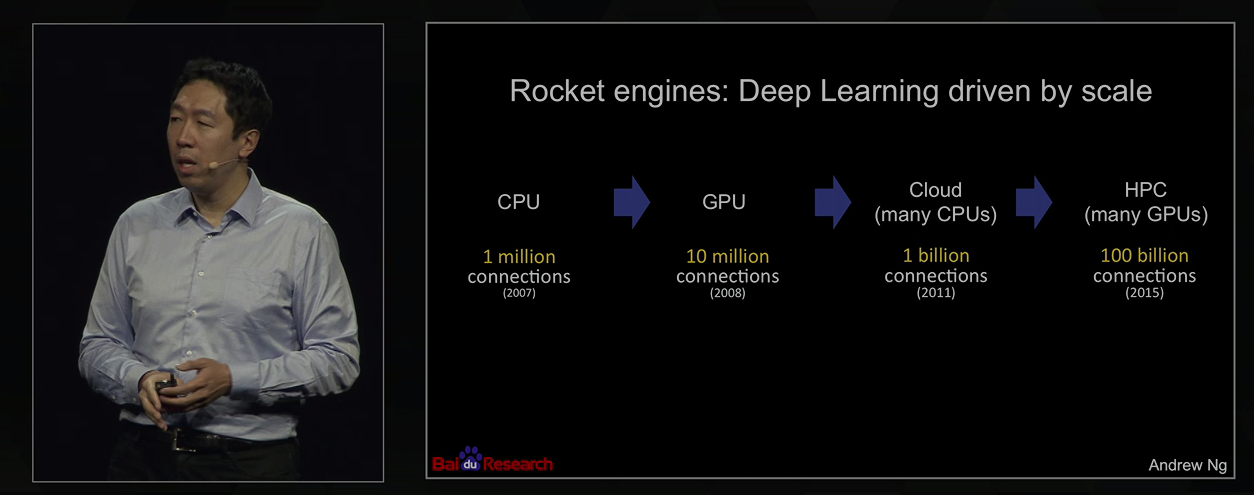

The move from cloud computing to HPC is driven by the need for synchronized computation, which is how HPC does its computation. The main difference between the two is that cloud computing tolerates faulty nodes: when some nodes in the cloud fail, it simply starts new ones. That suits internet services such as website hosting, where communication is asynchronous most of the time. Training a neural network, however, is mostly matrix computation, and it needs every core to stay on the same page most of the time.
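To make the "same page" point concrete, here is a minimal sketch of synchronous data-parallel training in plain Python. It assumes a toy linear model y = w * x and simulated workers (no real cluster or GPU), and the helper names `shard`, `local_gradient`, and `synchronous_sgd` are hypothetical. The key step is the barrier: the weight is only updated after every worker's gradient has been collected and averaged, so all workers always share the same weight.

```python
# Toy simulation (hypothetical helpers): workers are list shards, not real machines.

def shard(data, n_workers):
    # Split the dataset into n_workers interleaved shards.
    return [data[i::n_workers] for i in range(n_workers)]

def local_gradient(w, shard_data):
    # Gradient of the mean squared error (w*x - y)^2 over one shard.
    return sum(2 * (w * x - y) * x for x, y in shard_data) / len(shard_data)

def synchronous_sgd(data, n_workers=4, lr=0.01, steps=200):
    shards = shard(data, n_workers)
    w = 0.0  # every worker starts from the same weight
    for _ in range(steps):
        # Barrier: wait for a gradient from every worker before updating.
        grads = [local_gradient(w, s) for s in shards]
        # All-reduce (here a plain average), then one shared update.
        w -= lr * sum(grads) / len(grads)
    return w

if __name__ == "__main__":
    data = [(x, 3.0 * x) for x in range(1, 9)]  # toy data: y = 3x
    print(round(synchronous_sgd(data), 4))
```

On the toy data the weight converges to about 3.0. If one worker were allowed to skip the barrier and update with a stale weight, the shared state would drift apart, which is the kind of asynchrony the cloud model tolerates but tightly coupled matrix work does not.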

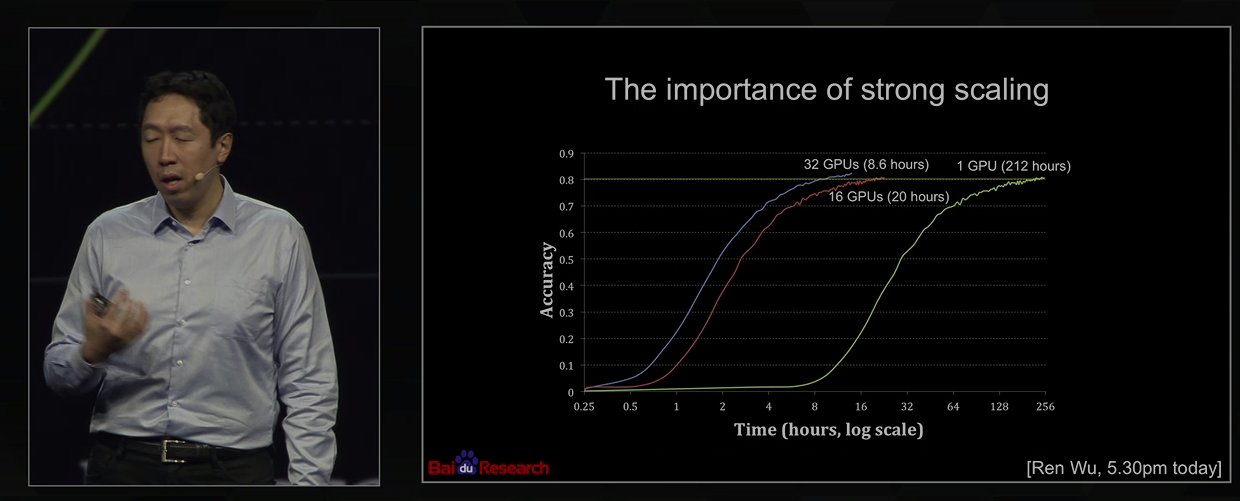

Obviously more GPUs will finish the job faster, but where is the sweet spot between the cost of GPUs and the cost of time?
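One way to frame the sweet-spot question is as a cost minimization. The sketch below is a hypothetical model, not something from the talk: it assumes runtime follows Amdahl's law with a fixed serial fraction, GPUs are billed per GPU-hour, and every hour of waiting has a dollar value. All function names, parameters, and numbers are made up for illustration.

```python
# Hypothetical cost model (assumptions: Amdahl's law, per-GPU-hour billing,
# a dollar value per hour of waiting). Not taken from the talk.

def runtime_hours(n_gpus, single_gpu_hours, serial_fraction):
    # Amdahl's law: only the parallel part shrinks as GPUs are added.
    return single_gpu_hours * (serial_fraction + (1 - serial_fraction) / n_gpus)

def total_cost(n_gpus, single_gpu_hours, serial_fraction,
               price_per_gpu_hour, value_per_hour):
    t = runtime_hours(n_gpus, single_gpu_hours, serial_fraction)
    hardware = n_gpus * t * price_per_gpu_hour  # money spent on GPUs
    waiting = t * value_per_hour                # money "spent" on time
    return hardware + waiting

def sweet_spot(max_gpus, **params):
    # Brute-force search: try every GPU count and keep the cheapest one.
    return min(range(1, max_gpus + 1), key=lambda n: total_cost(n, **params))
```

With `single_gpu_hours=100`, `serial_fraction=0.05`, `price_per_gpu_hour=1.0`, and `value_per_hour=50.0`, `sweet_spot(64, ...)` returns 31. Setting `value_per_hour=0.0` drops it to a single GPU: once time is free, the serial part makes every extra GPU pure overhead. The sweet spot moves with how much your time is worth.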

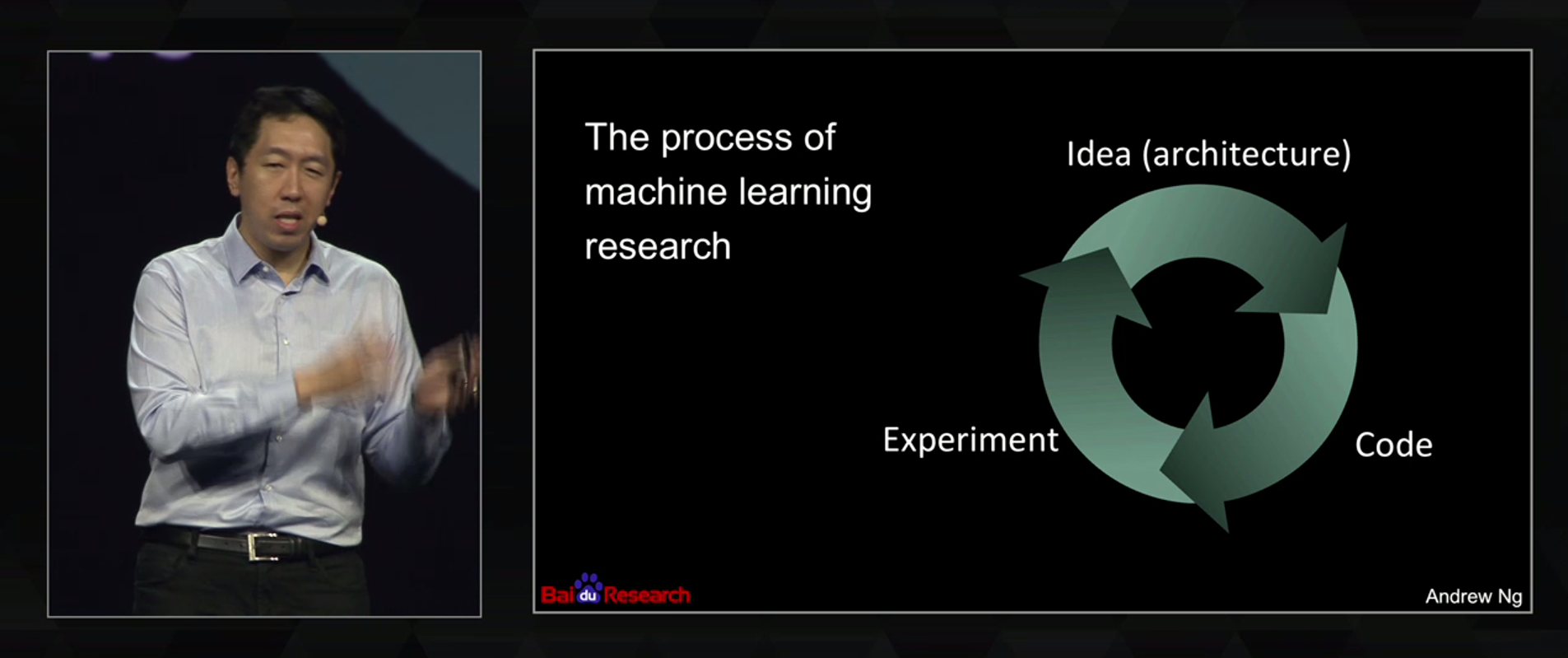

I have always believed that the best way to learn is by doing: think carefully about what happens during the experiments, then find the best resources to explain and solve the problem. In machine learning terms, this is supervised learning for humans. We should always seek feedback on what we've done, talk to people, and turn to books and papers for techniques that can solve our current problems.

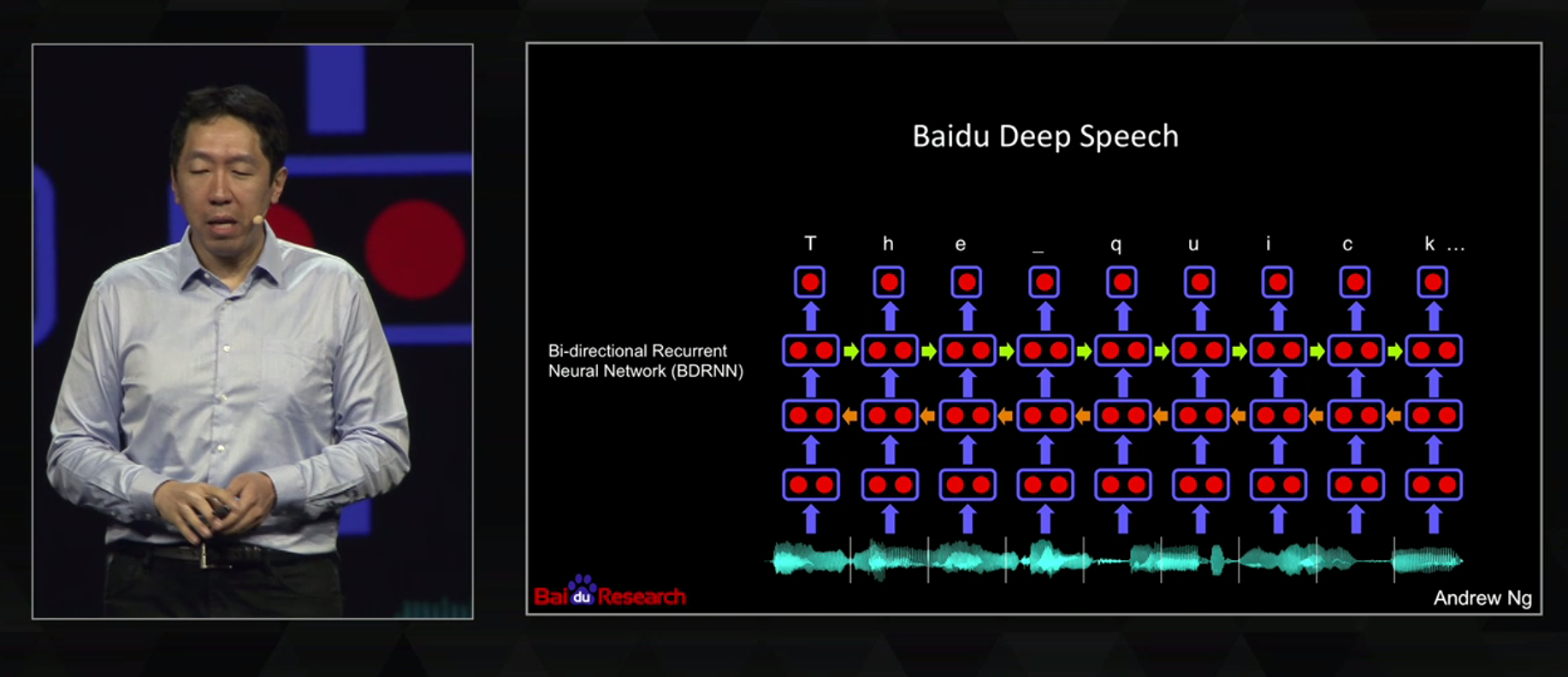

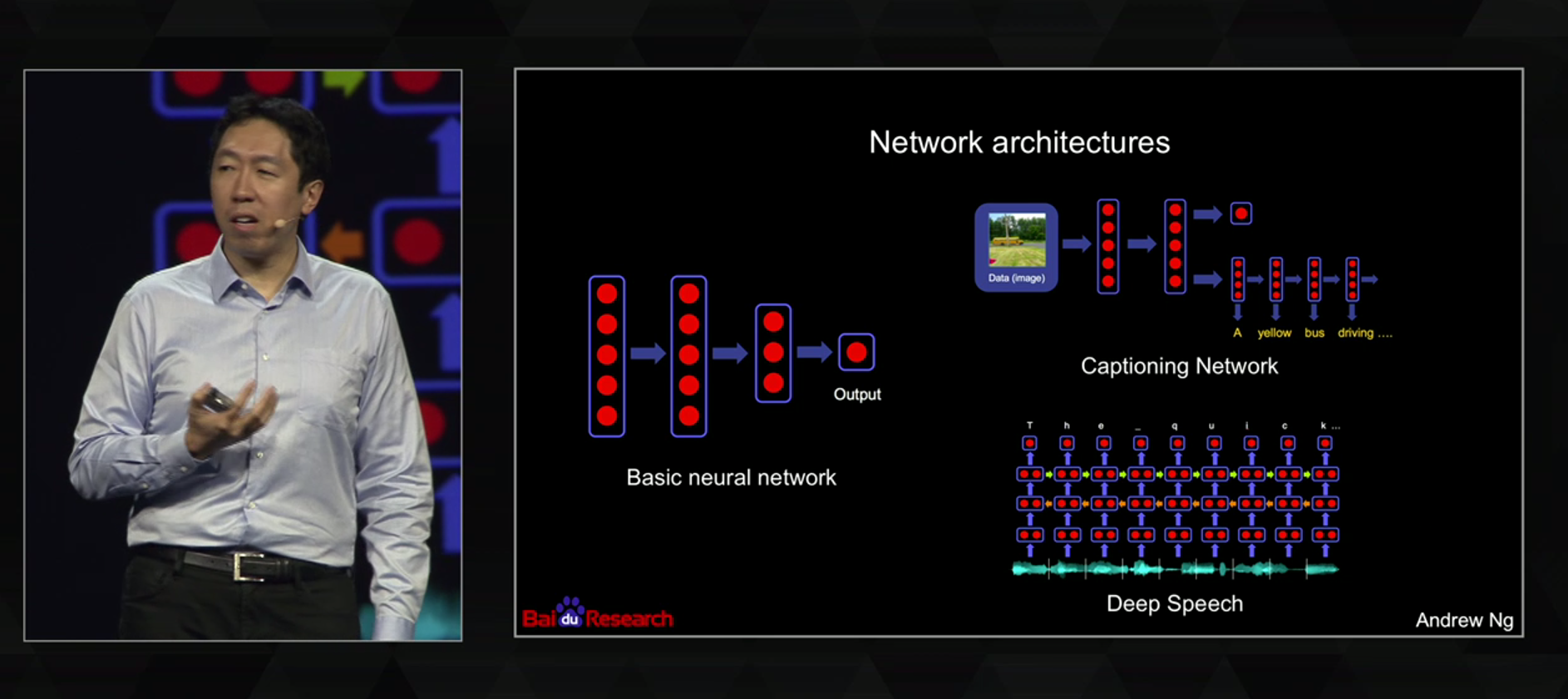

The promising new Deep Speech architecture: a bidirectional RNN (BDRNN). It helps each recognition unit of the network better capture what comes before and after it. BTW, a reminder to myself: BDRNN is the one I should learn this summer.
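For my own summer study, here is a minimal sketch of the bidirectional idea. It assumes a toy tanh RNN with a scalar hidden state and hand-picked weights; the helper names and parameters are hypothetical, and real speech models use vector states and learned weights. One RNN reads the sequence left to right, a second reads it right to left, and each time step receives both states. Here the two states are returned as a pair; real models typically concatenate or sum them.

```python
import math

# Hypothetical toy model: scalar hidden state, hand-picked weights.

def rnn_pass(xs, w_in, w_rec, bias):
    # Simple tanh RNN run left to right; returns the state at each step.
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_in * x + w_rec * h + bias)
        states.append(h)
    return states

def bidirectional_rnn(xs, fwd_params, bwd_params):
    # Forward pass: the state at step t summarizes xs[0..t] (the past).
    fwd = rnn_pass(xs, *fwd_params)
    # Backward pass: run over the reversed sequence, then flip it back so
    # the state at step t summarizes xs[t..end] (the future).
    bwd = rnn_pass(list(reversed(xs)), *bwd_params)[::-1]
    # Each time step gets both contexts as a (forward, backward) pair.
    return list(zip(fwd, bwd))

if __name__ == "__main__":
    xs = [0.1, 0.2, 0.3, 0.4]
    for step in bidirectional_rnn(xs, (0.5, 0.8, 0.0), (0.7, 0.6, 0.0)):
        print(step)
```

Changing the last input alters the backward state at the first time step but leaves the forward state there untouched, which is exactly the "after itself" context a forward-only RNN lacks.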